Grok faces Irish investigation as Europe scrutinises AI safeguards

Ireland launched a formal investigation into Grok's ability to produce sexually explicit deepfakes, including those of children.

Ireland opened a formal investigation into X’s artificial intelligence chatbot Grok on Tuesday over concerns it may have generated nude deepfakes and AI child sexual abuse material (CSAM).

The Data Protection Commission (DPC) will assess whether Grok’s generative AI tools comply with the European Union’s strict data protection rules.

“The DPC has been engaging with [X’s Irish entity X Internet Unlimited Company] since media reports first emerged a number of weeks ago concerning the alleged ability of X users to prompt the @Grok account on X to generate sexualised images of real people, including children,” deputy commissioner Graham Doyle said in a statement.

The inquiry will focus on core provisions of the General Data Protection Regulation, including whether personal data was processed lawfully and fairly, whether privacy protections were built in by design and by default, and whether the company carried out a required data protection impact assessment before deploying the tool. Regulators are examining whether safeguards were sufficient to prevent the creation of non-consensual intimate imagery.

Grok has around 30 million users globally, according to Business of Apps, and is integrated into X in addition to its standalone platform.

The case feeds into a broader debate about how quickly AI systems are brought to market and whether safety controls keep pace with their capabilities, particularly when minors and sensitive personal data may be involved. Similar allegations about insufficient safeguards have dogged other large AI companies in recent years, including over chatbots that have encouraged self-harm and suicide.

The Irish probe follows scrutiny in the UK, where the Information Commissioner’s Office (ICO) has launched formal investigations into X Internet Unlimited Company (XIUC) and xAI, the artificial intelligence company behind Grok.

Reports that the chatbot could produce CSAM prompted condemnation from UK officials, with the prime minister signalling that further regulation of chatbots is being considered.

Elon Musk said in January that he was not aware of any naked underage images generated by Grok. “Literally zero. Obviously, Grok does not spontaneously generate images, it does so only according to user requests. When asked to generate images, it will refuse to produce anything illegal, as the operating principle for Grok is to obey the laws of any given country or state,” he said.

“There may be times when adversarial hacking of Grok prompts does something unexpected. If that happens, we fix the bug immediately.”

The ICO additionally said it is examining whether personal data was used lawfully and whether sufficient safeguards were built into the system to prevent the generation of harmful sexualised image and video content.

William Malcolm, the regulator’s executive director of regulatory risk and innovation, said the reports raise “deeply troubling questions” about the use of personal data to create intimate images without consent.

Other European authorities are also taking a closer look at the company. In France, prosecutors in Paris confirmed on Feb. 3 that offices of X were raided by the country’s cybercrime unit as part of an investigation into suspected unlawful data extraction and complicity in the possession of CSAM. Musk dismissed the raid as a “political attack”.

X is separately under investigation by EU authorities in Brussels over compliance with the bloc’s Digital Services Act, which requires major platforms to curb the spread of illegal content.

Spain has also ordered prosecutors to investigate X, along with Meta and TikTok, over the alleged spread of AI-generated abusive content involving minors. Prime Minister Pedro Sánchez warned that such material threatens children’s mental health, dignity and rights. Spain is weighing additional online child protection measures, including a proposal to bar social media access for users under 16.

Meanwhile, in the U.S., three lawmakers called for a Department of Justice investigation after a 24-hour review found that Grok generated roughly 6,700 sexually explicit or suggestive images per hour on X between Jan. 5 and Jan. 6.

By comparison, the five most prominent AI “undressing” websites averaged 79 such images per hour during the same period, according to the analysis cited by lawmakers.

Scamurai attempted to generate nude deepfakes using several prompts on Grok but was unable to do so. It was able to create sexually suggestive images of celebrities such as Taylor Swift, though none with full nudity.

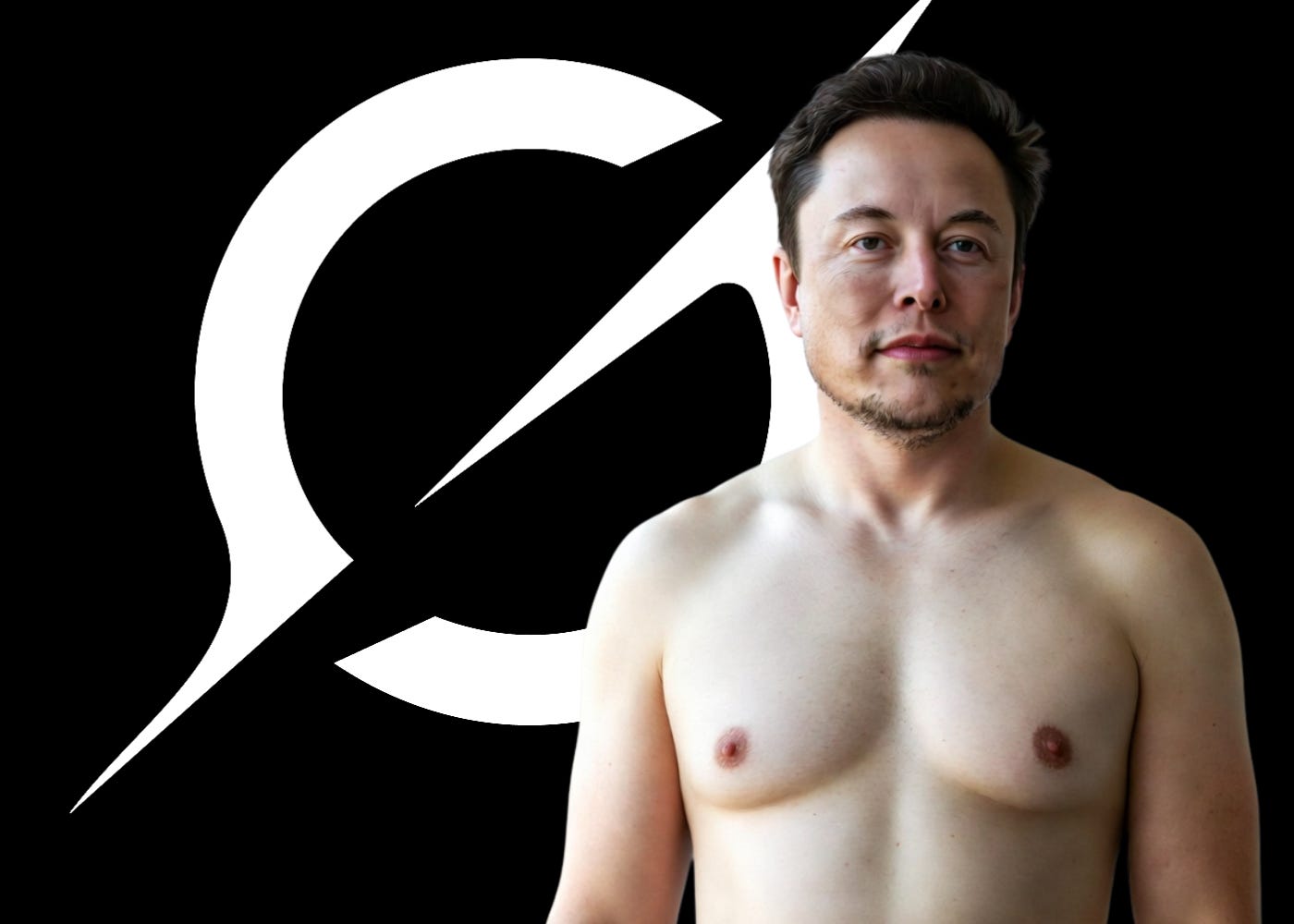

It was also able to produce a topless picture of Musk. When requests for further nudification were denied, Grok suggested, unprompted, alternative tools that would not moderate such content.
